OpenCV Python Tutorial - Removing the Background from a Video and Detecting Moving Objects (Background Subtraction)

2023. 12. 10. 07:34

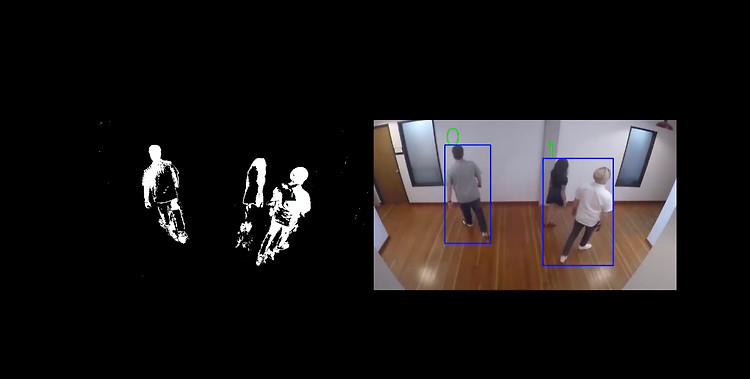

This is a Background Subtraction example that removes the background from a video and detects the objects moving in it.
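To make the idea concrete, here is a minimal sketch of the simplest form of background subtraction. It keeps a running average of past frames as the background model and flags every pixel that differs from it by more than a threshold. This is not the KNN algorithm used in this post. The function name `running_average_foreground` and the `alpha`/`threshold` values are assumptions chosen for illustration, and the frames are synthetic NumPy arrays rather than a video.

```python
import numpy as np


def running_average_foreground(frames, alpha=0.05, threshold=30):
    """Yield a 0/255 foreground mask for each grayscale frame.

    Illustrative only (hypothetical helper): the background model is an
    exponential running average of the previous frames, and a pixel is
    foreground when it differs from that average by more than `threshold`.
    """
    background = None
    for frame in frames:
        current = frame.astype(np.float32)
        if background is None:
            background = current.copy()  # the first frame initializes the model
        diff = np.abs(current - background)
        mask = np.where(diff > threshold, 255, 0).astype(np.uint8)
        # Blend the current frame into the background model
        background = (1 - alpha) * background + alpha * current
        yield mask


# Synthetic input: 10 frames of an empty dark scene, then a bright 10x10 square appears
frames = [np.full((60, 80), 20, dtype=np.uint8) for _ in range(10)]
moving = np.full((60, 80), 20, dtype=np.uint8)
moving[20:30, 30:40] = 220
frames.append(moving)

masks = list(running_average_foreground(frames))
last = masks[-1]
print(last[25, 35], last[0, 0])  # → 255 0
```

This model blends every pixel into the background, so an object that stops moving gradually fades into it. OpenCV's subtractors such as KNN maintain a richer per-pixel model instead.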

I tested it with createBackgroundSubtractorKNN, one of the Background Subtraction algorithms that OpenCV provides.

First written: 2018. 9. 22

Last updated: 2023. 12. 10 - added code that merges nearby boxes into one
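The post's box-merging helpers group boxes by comparing the distances between their corners and edge midpoints, and each box joins the first group it is close to. For comparison, here is a minimal sketch of a different approach (an assumption, not the post's code). It measures the gap between two rectangles directly and merges close pairs with a union-find structure, so a chain of nearby boxes ends up in a single group even when its two ends are far apart. `rect_gap`, `merge_nearby_boxes`, and the `eps` values are hypothetical names and values.

```python
def rect_gap(a, b):
    """Shortest distance between two (x, y, w, h) boxes; 0 if they touch or overlap."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    dx = max(bx - (ax + aw), ax - (bx + bw), 0)
    dy = max(by - (ay + ah), ay - (by + bh), 0)
    return (dx * dx + dy * dy) ** 0.5


def merge_nearby_boxes(boxes, eps):
    """Group boxes closer than eps (transitively) and return one enclosing box per group."""
    parent = list(range(len(boxes)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps the trees shallow
            i = parent[i]
        return i

    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            if rect_gap(boxes[i], boxes[j]) <= eps:
                parent[find(i)] = find(j)  # union the two groups

    groups = {}
    for i, box in enumerate(boxes):
        groups.setdefault(find(i), []).append(box)

    merged = []
    for members in groups.values():
        x0 = min(b[0] for b in members)
        y0 = min(b[1] for b in members)
        x1 = max(b[0] + b[2] for b in members)
        y1 = max(b[1] + b[3] for b in members)
        merged.append((x0, y0, x1 - x0, y1 - y0))
    return merged


boxes = [(10, 10, 20, 20), (35, 12, 10, 10), (200, 200, 5, 5)]
print(sorted(merge_nearby_boxes(boxes, eps=10)))  # → [(10, 10, 35, 20), (200, 200, 5, 5)]
```

Union-find makes the result independent of the order in which the boxes arrive. In a single greedy pass, a box that bridges two existing groups joins only one of them.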

OpenCV Python - Background Subtraction test video

```python
import os

import cv2
import numpy as np


def get_points(box):
    """Return the 4 corners and the 4 edge midpoints of an (x, y, w, h) box."""
    x, y, w, h = box
    return [(x, y), (x + w, y), (x, y + h), (x + w, y + h),
            ((2 * x + w) / 2, y), (x, (2 * y + h) / 2),
            ((2 * x + w) / 2, y + h), (x + w, (2 * y + h) / 2)]


def combine_boxes(bounding_boxes, groups):
    """Replace each group of boxes with the single box that encloses all of them."""
    combined_boxes = []
    for group in groups:
        min_x = min(box[0] for box in group)
        min_y = min(box[1] for box in group)
        max_x = max(box[0] + box[2] for box in group)
        max_y = max(box[1] + box[3] for box in group)
        combined_boxes.append((min_x, min_y, max_x - min_x, max_y - min_y))
    return combined_boxes


def is_adjacent_or_overlap(box1, box2):
    """True if the two boxes overlap or touch (the test is symmetric)."""
    x1, y1, w1, h1 = box1
    x2, y2, w2, h2 = box2
    return x1 <= x2 + w2 and x1 + w1 >= x2 and y1 <= y2 + h2 and y1 + h1 >= y2


def remove_overlaps_or_adjacent(bounding_boxes):
    """For each pair of overlapping or touching boxes, keep only the larger one."""
    removed_boxes = bounding_boxes.copy()
    for box1 in bounding_boxes:
        for box2 in bounding_boxes:
            # Skip pairs where either box is already gone; otherwise two boxes
            # of equal area would remove each other and both would disappear.
            if box1 == box2 or box1 not in removed_boxes or box2 not in removed_boxes:
                continue
            if is_adjacent_or_overlap(box1, box2):
                if box1[2] * box1[3] < box2[2] * box2[3]:
                    removed_boxes.remove(box1)
                else:
                    removed_boxes.remove(box2)
    return removed_boxes


def group_boxes(bounding_boxes, eps):
    """Greedily group boxes whose corner/midpoint distance is at most eps."""
    groups = []
    grouped = set()  # boxes that already belong to a group
    for box in bounding_boxes:
        if box in grouped:
            continue
        added = False
        for group in groups:
            for existing_box in group:
                if any(np.linalg.norm(np.subtract(p1, p2)) <= eps
                       for p1 in get_points(box) for p2 in get_points(existing_box)):
                    group.append(box)
                    grouped.add(box)
                    added = True
                    break
            if added:
                break
        if not added:
            groups.append([box])
            grouped.add(box)
    return groups


def process_boxes(bounding_boxes, eps):
    groups = group_boxes(bounding_boxes, eps)
    combined_boxes = combine_boxes(bounding_boxes, groups)
    return remove_overlaps_or_adjacent(combined_boxes)


# Test video source - https://github.com/intel-iot-devkit/sample-videos/blob/master/people-detection.mp4
cap = cv2.VideoCapture("people-detection.mp4")
if not cap.isOpened():
    raise SystemExit("Could not open people-detection.mp4")

# Get the fps of the video (fall back to 30 if it is not reported)
fps = cap.get(cv2.CAP_PROP_FPS)
print('fps', fps)
if fps == 0.0:
    fps = 30.0

# Every result frame is centered on a 1920x1080 (FHD) canvas before writing
width, height = 1920, 1080
fourcc = cv2.VideoWriter_fourcc(*'XVID')
BASE_DIR = os.path.dirname(os.path.abspath(__file__))
writer = cv2.VideoWriter(os.path.join(BASE_DIR, 'result.avi'), fourcc, fps, (width, height))

# Parameter explanation: https://stackoverflow.com/a/43636256
fgbg = cv2.createBackgroundSubtractorKNN(history=5, dist2Threshold=400, detectShadows=False)

fhd = np.zeros((height, width, 3), dtype=np.uint8)

while True:
    ret, frame = cap.read()
    if not ret:
        break

    # Foreground mask: 255 for moving pixels, 0 for background
    fgmask = fgbg.apply(frame)

    # Label each connected blob; each stats row is (x, y, w, h, area)
    nlabels, labels, stats, centroids = cv2.connectedComponentsWithStats(fgmask)

    bbox_list = []
    for index in range(1, nlabels):  # label 0 is the background
        x, y, w, h, area = stats[index]
        if area > 100:  # ignore small noise blobs
            bbox_list.append((int(x), int(y), int(w), int(h)))

    # Group nearby boxes and merge each group into one box
    processed_boxes = process_boxes(bbox_list, 50)
    for i, box in enumerate(processed_boxes):
        x, y, w, h = box
        cv2.putText(frame, str(i), (x, y), cv2.FONT_HERSHEY_SIMPLEX, 2, (0, 255, 0), 2)
        cv2.rectangle(frame, box, (255, 0, 0), 2)

    # Show the mask and the annotated frame side by side
    fgmask = cv2.cvtColor(fgmask, cv2.COLOR_GRAY2BGR)
    result = cv2.hconcat([fgmask, frame])

    # Shrink the result if it does not fit on the canvas, then center it
    h, w = result.shape[:2]
    if w > width or h > height:
        scale = min(width / w, height / h)
        result = cv2.resize(result, (int(w * scale), int(h * scale)))
        h, w = result.shape[:2]
    center_y = (height - h) // 2
    center_x = (width - w) // 2
    fhd[center_y:center_y + h, center_x:center_x + w] = result

    cv2.imshow('result', result)
    writer.write(fhd)

    if (cv2.waitKey(30) & 0xff) == 27:  # ESC quits
        break

cap.release()
writer.release()
cv2.destroyAllWindows()
```

References

https://docs.opencv.org/3.4.3/db/d5c/tutorial_py_bg_subtraction.html
