Trying Out SIFT and XFeat

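I tried XFeat, which is said to outperform SIFT when the camera moves quickly. This post started with still-image tests only; a real-time (video) test was added afterwards and appears further down.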

2024. 7. 28 First written

2024. 7. 28 Added the real-time test.

References

https://github.com/verlab/accelerated_features

https://docs.opencv.org/4.x/dc/dc3/tutorial_py_matcher.html

It is best to set up a conda environment first, as described in the following post.

Creating a Python development environment with Visual Studio Code and Miniconda (Windows, Ubuntu, WSL2)

https://webnautes.tistory.com/1842

Now set up the environment for testing XFeat.

Clone the XFeat GitHub repository.

git clone https://github.com/verlab/accelerated_features.git

cd accelerated_features

Create and activate a Python virtual environment.

conda create -n xfeat python=3.8

conda activate xfeat

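Use one of the following two commands depending on whether you have a GPU. At the time of writing, PyTorch 2.4 was installed. Note that the CPU command pins torch 1.10.1 explicitly, so it will not install 2.4.

# With a GPU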

conda install pytorch torchvision torchaudio pytorch-cuda=11.8 -c pytorch -c nvidia

# CPU only

pip install torch==1.10.1+cpu -f https://download.pytorch.org/whl/cpu/torch_stable.html

Install the required packages.

pip install opencv-contrib-python tqdm matplotlib
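Once the packages are installed, it can be useful to confirm which device PyTorch will actually use. The following is only a minimal sketch: `pick_device` is a hypothetical helper name made up for this post, not part of XFeat, and it relies only on `torch.cuda.is_available()`.

```python
def pick_device():
    """Return 'cuda' if PyTorch can see a CUDA GPU, otherwise 'cpu'.

    Returns 'torch-not-installed' when PyTorch cannot be imported at all,
    which usually means the wrong conda environment is active.
    """
    try:
        import torch
    except ImportError:
        return "torch-not-installed"
    return "cuda" if torch.cuda.is_available() else "cpu"


print("PyTorch device:", pick_device())
```

If this prints `cpu` after the GPU install command, the CUDA build of PyTorch or the NVIDIA driver is probably not being picked up.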

The code must be run from this folder (the repository root); otherwise the XFeat module cannot be imported.

(xfeat) webnautes@webnautes-laptop:~/accelerated_features$ pwd

/home/webnautes/accelerated_features
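If a script has to live outside the repository, one possible workaround is to add the repository root to `sys.path` before importing. This is a sketch that assumes the repository was cloned to `~/accelerated_features` as shown above; `add_repo_to_path` is a hypothetical helper, not something the repository provides.

```python
import sys
from pathlib import Path


def add_repo_to_path(repo_root):
    """Put the cloned repository on sys.path so that
    `from modules.xfeat import XFeat` resolves from any working directory."""
    root = str(Path(repo_root).expanduser().resolve())
    if root not in sys.path:
        sys.path.insert(0, root)
    return root


# Assumed clone location; change it to wherever the repository actually is.
root = add_repo_to_path("~/accelerated_features")
print("modules folder found:", (Path(root) / "modules").is_dir())
```

If the last line prints `False`, the path is wrong and the import will still fail.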

Launch VS Code in the current directory with the command below, then run the Python code that follows.

code .

    import cv2
    import matplotlib.pyplot as plt
    import numpy as np

    from modules.xfeat import XFeat

    xfeat = XFeat()

    # To use the sample images that ship with XFeat instead:
    # im1 = cv2.imread('./assets/ref.png')
    # im2 = cv2.imread('./assets/tgt.png')

    # Use your own images.
    im1 = cv2.imread('1.png')  # photo of the object to find
    im2 = cv2.imread('2.png')  # photo that contains the object


    def warp_corners_and_draw_matches(ref_points, dst_points, img1, img2):
        # Calculate the homography matrix
        H, mask = cv2.findHomography(ref_points, dst_points, cv2.USAC_MAGSAC, 3.5,
                                     maxIters=1_000, confidence=0.999)
        mask = mask.flatten()

        # Get corners of the first image (image1)
        h, w = img1.shape[:2]
        corners_img1 = np.array([[0, 0], [w-1, 0], [w-1, h-1], [0, h-1]],
                                dtype=np.float32).reshape(-1, 1, 2)

        # Warp corners to the second image (image2) space
        warped_corners = cv2.perspectiveTransform(corners_img1, H)

        # Draw the warped corners in image2
        img2_with_corners = img2.copy()
        for i in range(len(warped_corners)):
            start_point = tuple(warped_corners[i-1][0].astype(int))
            end_point = tuple(warped_corners[i][0].astype(int))
            cv2.line(img2_with_corners, start_point, end_point, (0, 255, 0), 4)  # solid green outline

        # Prepare keypoints and matches for the drawMatches function
        keypoints1 = [cv2.KeyPoint(float(p[0]), float(p[1]), 5) for p in ref_points]
        keypoints2 = [cv2.KeyPoint(float(p[0]), float(p[1]), 5) for p in dst_points]
        matches = [cv2.DMatch(i, i, 0) for i in range(len(mask)) if mask[i]]

        # Draw inlier matches
        img_matches = cv2.drawMatches(img1, keypoints1, img2_with_corners, keypoints2,
                                      matches, None, matchColor=(0, 255, 0), flags=2)
        return img_matches


    # Use out-of-the-box function for extraction + MNN matching
    mkpts_0, mkpts_1 = xfeat.match_xfeat(im1, im2, top_k=4096)
    canvas = warp_corners_and_draw_matches(mkpts_0, mkpts_1, im1, im2)
    plt.figure(figsize=(12, 12))
    plt.imshow(canvas[..., ::-1])  # BGR -> RGB for matplotlib
    plt.show()

    # Use out-of-the-box function for extraction + coarse-to-fine matching
    mkpts_0, mkpts_1 = xfeat.match_xfeat_star(im1, im2, top_k=8000)
    canvas = warp_corners_and_draw_matches(mkpts_0, mkpts_1, im1, im2)
    plt.figure(figsize=(12, 12))
    plt.imshow(canvas[..., ::-1])
    plt.show()

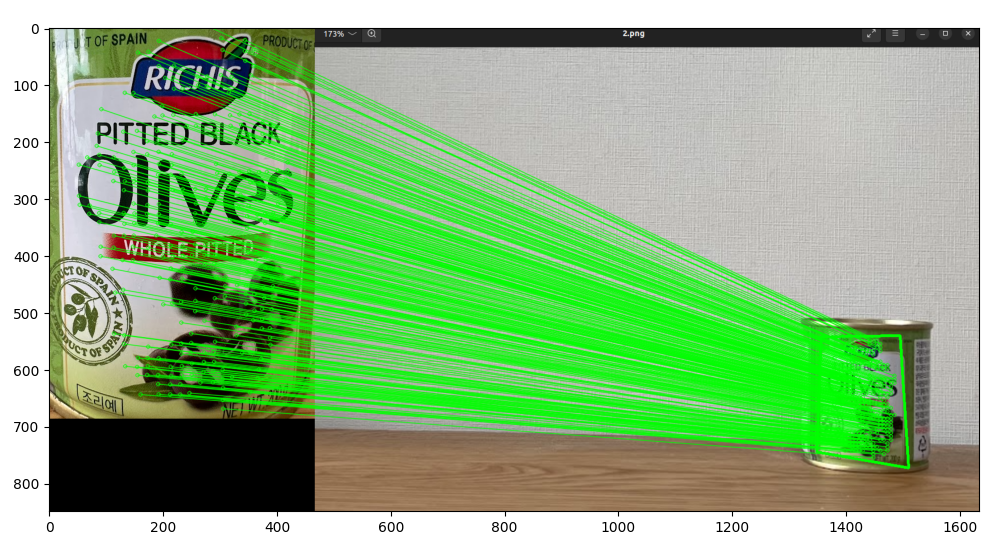

This is the result. The figure is shown twice: first for match_xfeat, then for match_xfeat_star.
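The comments in the code mention MNN (mutual nearest neighbor) matching. To illustrate the idea only, here is a small NumPy sketch on toy descriptors. It is not XFeat's implementation: the function name `mutual_nearest_neighbors` and the toy data are made up, and it assumes descriptors are compared by dot product.

```python
import numpy as np


def mutual_nearest_neighbors(desc1, desc2):
    """Return index pairs (i, j) where desc1[i] and desc2[j] pick each other as best match."""
    sim = desc1 @ desc2.T            # similarity matrix, shape (N1, N2)
    nn12 = sim.argmax(axis=1)        # best j for every i
    nn21 = sim.argmax(axis=0)        # best i for every j
    idx1 = np.arange(len(desc1))
    keep = nn21[nn12] == idx1        # keep i only if its best j points back to i
    return np.stack([idx1[keep], nn12[keep]], axis=1)


# Toy descriptors: the third row of desc1 has no mutual partner in desc2.
desc1 = np.array([[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]])
desc2 = np.array([[0.0, 1.0], [1.0, 0.0]])
pairs = mutual_nearest_neighbors(desc1, desc2)
print(pairs)
```

A match is kept only when both sides agree, which removes many one-sided false matches before the homography is estimated.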

Next, I also tried SIFT, which is included in OpenCV.

    import cv2 as cv
    import matplotlib.pyplot as plt

    img1 = cv.imread('1.png', cv.IMREAD_GRAYSCALE)  # photo of the object to find
    img2 = cv.imread('2.png', cv.IMREAD_GRAYSCALE)  # photo that contains the object

    # Initiate SIFT detector
    sift = cv.SIFT_create()

    # Find the keypoints and descriptors with SIFT
    kp1, des1 = sift.detectAndCompute(img1, None)
    kp2, des2 = sift.detectAndCompute(img2, None)

    # BFMatcher with default params
    bf = cv.BFMatcher()
    matches = bf.knnMatch(des1, des2, k=2)

    # Apply ratio test
    good = []
    for m, n in matches:
        if m.distance < 0.75 * n.distance:
            good.append([m])

    # cv.drawMatchesKnn expects a list of lists as matches.
    img3 = cv.drawMatchesKnn(img1, kp1, img2, kp2, good, None,
                             flags=cv.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS)

    plt.imshow(img3)
    plt.show()

This is the result. The code comes from the OpenCV tutorial; it draws the matches but does not locate the object.

Here are the SIFT and XFeat comparison results. The video below shows real-time matching with XFeat.

The video below shows real-time matching with SIFT.

This is the code used for the test.

XFeat

    import cv2
    import numpy as np

    from modules.xfeat import XFeat

    xfeat = XFeat()

    # To use the sample images that ship with XFeat instead:
    # im1 = cv2.imread('./assets/ref.png')
    # im2 = cv2.imread('./assets/tgt.png')

    # Use your own image.
    im1 = cv2.imread('1.png')  # photo of the object to find
    # im2 = cv2.imread('2.png')  # photo that contains the object (replaced by video frames)


    def warp_corners_and_draw_matches(ref_points, dst_points, img1, img2):
        # Calculate the homography matrix
        H, mask = cv2.findHomography(ref_points, dst_points, cv2.USAC_MAGSAC, 3.5,
                                     maxIters=1_000, confidence=0.999)
        mask = mask.flatten()

        # Get corners of the first image (image1)
        h, w = img1.shape[:2]
        corners_img1 = np.array([[0, 0], [w-1, 0], [w-1, h-1], [0, h-1]],
                                dtype=np.float32).reshape(-1, 1, 2)

        # Warp corners to the second image (image2) space
        warped_corners = cv2.perspectiveTransform(corners_img1, H)

        # Draw the warped corners in image2
        img2_with_corners = img2.copy()
        for i in range(len(warped_corners)):
            start_point = tuple(warped_corners[i-1][0].astype(int))
            end_point = tuple(warped_corners[i][0].astype(int))
            cv2.line(img2_with_corners, start_point, end_point, (0, 255, 0), 4)  # solid green outline

        # Prepare keypoints and matches for the drawMatches function
        keypoints1 = [cv2.KeyPoint(float(p[0]), float(p[1]), 5) for p in ref_points]
        keypoints2 = [cv2.KeyPoint(float(p[0]), float(p[1]), 5) for p in dst_points]
        matches = [cv2.DMatch(i, i, 0) for i in range(len(mask)) if mask[i]]

        # Draw inlier matches
        img_matches = cv2.drawMatches(img1, keypoints1, img2_with_corners, keypoints2,
                                      matches, None, matchColor=(0, 255, 0), flags=2)
        return img_matches


    cap = cv2.VideoCapture('test.MOV')

    while True:
        ret, frame = cap.read()
        if not ret:
            break

        im2 = frame

        # Use out-of-the-box function for extraction + MNN matching
        mkpts_0, mkpts_1 = xfeat.match_xfeat(im1, im2, top_k=14096)

        # findHomography needs at least 4 point pairs; skip frames with too few matches
        if len(mkpts_0) < 4:
            continue

        canvas = warp_corners_and_draw_matches(mkpts_0, mkpts_1, im1, im2)

        # Show the result at half size
        h, w, _ = canvas.shape
        canvas = cv2.resize(canvas, (int(w/2), int(h/2)), interpolation=cv2.INTER_AREA)
        cv2.imshow('ret', canvas)

        key = cv2.waitKey(1)
        if key == 27:  # Esc
            break

    cap.release()
    cv2.destroyAllWindows()

SIFT

    import cv2 as cv

    img1 = cv.imread('1.png', cv.IMREAD_GRAYSCALE)  # photo of the object to find
    # img2 = cv.imread('2.png', cv.IMREAD_GRAYSCALE)  # photo that contains the object (replaced by video frames)

    # Initiate SIFT detector
    sift = cv.SIFT_create()

    cap = cv.VideoCapture('test.MOV')

    while True:
        ret, frame = cap.read()
        if not ret:
            break

        img2 = cv.cvtColor(frame, cv.COLOR_BGR2GRAY)

        # Find the keypoints and descriptors with SIFT
        kp1, des1 = sift.detectAndCompute(img1, None)
        kp2, des2 = sift.detectAndCompute(img2, None)

        # knnMatch with k=2 needs at least two descriptors in the frame
        if des2 is None or len(des2) < 2:
            continue

        # BFMatcher with default params
        bf = cv.BFMatcher()
        matches = bf.knnMatch(des1, des2, k=2)

        # Apply ratio test
        good = []
        for m, n in matches:
            if m.distance < 0.75 * n.distance:
                good.append([m])

        # cv.drawMatchesKnn expects a list of lists as matches.
        img3 = cv.drawMatchesKnn(img1, kp1, img2, kp2, good, None,
                                 flags=cv.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS)

        # Show the result at half size
        h, w, _ = img3.shape
        img3 = cv.resize(img3, (int(w/2), int(h/2)), interpolation=cv.INTER_AREA)
        cv.imshow('ret', img3)

        key = cv.waitKey(1)
        if key == 27:  # Esc
            break

    cap.release()
    cv.destroyAllWindows()
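To compare the two loops with numbers rather than by eye, the per-frame processing time can be measured. The following is a sketch only: `FrameTimer` is a hypothetical helper written for this post, and `time.sleep` stands in for the matching step so the block runs without a video file.

```python
import time


class FrameTimer:
    """Accumulates per-frame processing time and reports the average and FPS."""

    def __init__(self):
        self.total = 0.0
        self.frames = 0

    def measure(self, func, *args, **kwargs):
        """Call func(*args, **kwargs), record how long it took, and return its result."""
        start = time.perf_counter()
        result = func(*args, **kwargs)
        self.total += time.perf_counter() - start
        self.frames += 1
        return result

    def average_ms(self):
        return 1000.0 * self.total / self.frames if self.frames else 0.0

    def fps(self):
        return self.frames / self.total if self.total > 0 else 0.0


timer = FrameTimer()
for _ in range(5):
    timer.measure(time.sleep, 0.01)  # stand-in for one matching step
print(f"{timer.average_ms():.1f} ms/frame, {timer.fps():.1f} FPS")
```

In the XFeat loop the matching call could be wrapped as `mkpts_0, mkpts_1 = timer.measure(xfeat.match_xfeat, im1, im2, top_k=14096)`. For SIFT, the detection and matching steps would first have to be moved into one function. Drawing and `imshow` are best left outside the measurement so that only the matching cost is compared.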

These are the video and images used for the test.